Large Language Models (LLMs) have advanced in almost every domain, ranging from healthcare and finance to education and social media. Clinicians in the medical industry rely on a wide variety of data sources to deliver high-quality care. Modalities such as clinical notes, lab results, vital signs and observations, medical photographs, and genomics data are included in this category of information. Though there have been constant developments in the field of biomedical Artificial Intelligence (AI), the majority of AI models in use today are restricted to working on a single job and analyzing data from a single modality.

The well-known foundation models offer a chance to completely transform medical AI, and these models are adjusted to different activities and environments through in-context learning or few-shot fine-tuning since they are trained on enormous volumes of data utilizing self-supervised or unsupervised learning objectives. Unified biomedical AI systems that can understand data from several modalities with complicated structures to handle a variety of medical difficulties are now being developed. Such models are anticipated to have an impact on everything from basic biomedical research to patient treatment.

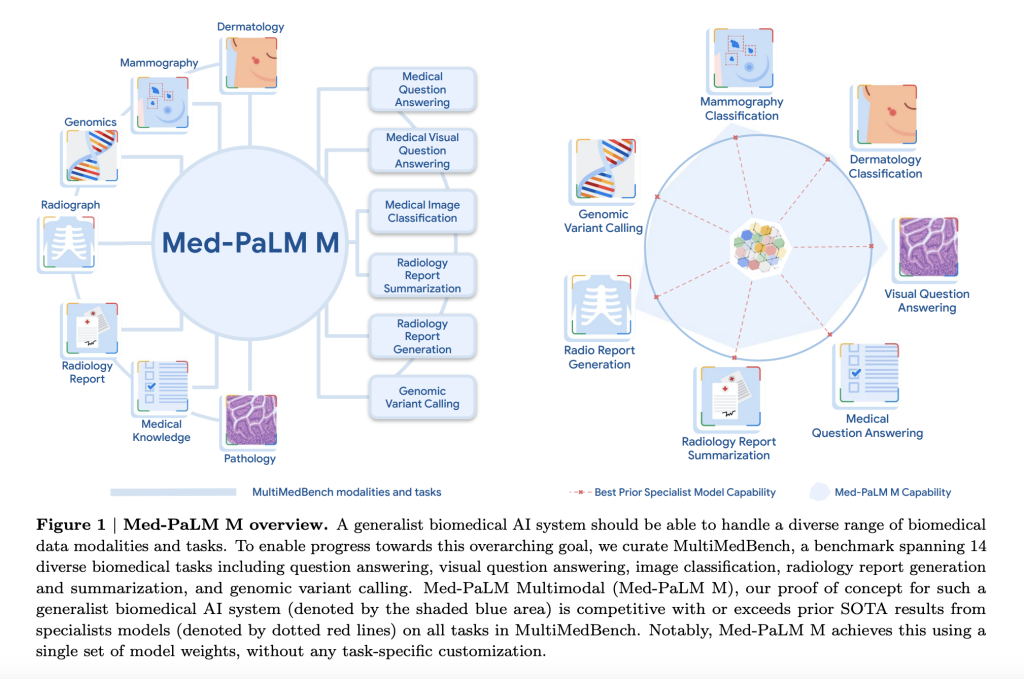

Researchers have been putting in efforts towards creating a general-purpose biomedical AI system. To facilitate the development of these generalist biomedical AI systems, a team of researchers from Google Research and Google DeepMind have introduced MultiMedBench, a unique benchmark made up of 14 different biomedical activities, to aid in the development of these biomedical AI systems. These activities cover a range of difficulties, including answering medical questions, analyzing dermatological and mammography images, creating and summarising radiology reports, and identifying genomic variations.

Join the fastest growing ML Community on Reddit

The authors have also provided a proof of concept called Med-PaLM Multimodal (Med-PaLM M), a sizable multimodal generative model that can understand and encode many kinds of biomedical data, such as clinical language, medical pictures, and genetic data, with a variety of different levels of flexibility. When compared to cutting-edge models, Med-PaLM M has achieved competitive or even higher performance on all tasks covered in the MultiMedBench assessment. Med-PaLM M even performed noticeably better in many cases than specialized models.

The team has also shared some unique Med-PaLM M capabilities. They have given evidence of the model’s capacity for positive transfer learning across tasks and zero-shot generalization to medical ideas and tasks. The AI system exhibits an emergent capacity for zero-shot medical reasoning, which means it can make decisions regarding medical situations for which it was not specifically trained. Despite these encouraging results, the team has stressed that additional work needs to be done before these generalist biomedical AI systems can be used in practical settings. Still, the published results mark a considerable step forward for these systems and offer encouraging possibilities for the future creation of AI-powered medical solutions.

The team has summarized the contributions in the following way.

The work demonstrates the potential of generalist biomedical AI systems for medical applications, though access to extensive biological data for training and validating in-use performance continues to be a problem.

MultiMedBench has 14 unique tasks encompassing a range of biomedical modalities. Med-PaLM M, the first multitasking generalist biomedical AI system, has been introduced that does not require task-specific modifications.

The AI system demonstrates emergent abilities, such as generalization to new medical concepts and zero-shot medical reasoning.

Potential clinical utility is indicated by a human review of Med-PaLM M’s outputs, particularly in producing chest X-ray reports.

With low average mistakes, radiologists favor Med-PaLM M reports over radiologists’ reports in up to 40.50% of cases.

Check out the Paper. All Credit For This Research Goes To the Researchers on This Project. Also, don’t forget to join our 27k+ ML SubReddit, Discord Channel, and Email Newsletter, where we share the latest AI research news, cool AI projects, and more.

The post Meet Med-PaLM Multimodal (Med-PaLM M): A Large Multimodal Generative Model that Flexibly Encodes and Interprets Biomedical Data appeared first on MarkTechPost.